Computational demands are rising rapidly across all fields of scientific research. With the increasing complexity and data rates of modern detectors, sophisticated computing systems are quickly becoming an integral part of data acquisition and control instrumentation. Online image reconstruction and analysis enable the design of complex experiments. Scientists get an overview of the experiment in real time and are able to control processes based on visual information, either automatically or manually. Furthermore, within a 10-year horizon we expect detectors generating terabits of data per second, while the performance of storage systems falls far behind. This makes it necessary to identify and extract the scientifically relevant data already during the data acquisition phase and to apply further data compression wherever possible.
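For a sense of scale, consider a detector that sustains 1 Tbit/s. This figure is a round number chosen only for illustration, and decimal units are used. A back-of-the-envelope conversion gives:

$$
1\,\mathrm{Tbit/s} = \frac{10^{12}\,\mathrm{bit/s}}{8\,\mathrm{bit/B}} = 125\,\mathrm{GB/s}
\quad\Rightarrow\quad
125\,\mathrm{GB/s} \times 3600\,\mathrm{s/h} = 450\,\mathrm{TB/h} \approx 10.8\,\mathrm{PB/day}.
$$

Even a single such stream would fill petabytes of storage per day, which illustrates why reducing the data already during acquisition is attractive.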

Computing architectures are changing rapidly and provide an increasing variety of technologies suitable for different applications. Recent advances are characterized by high parallelism and increasing heterogeneity of computing architectures, as well as a further widening gap between computing power and memory bandwidth. A variety of computing technologies, including multi-core CPUs, GPUs, and FPGAs, are readily available on most HPC platforms. All major vendors have introduced computational hardware optimized for machine-learning workloads, both as special blocks in recent GPU and FPGA architectures and as stand-alone products. Reduced-precision computation got a significant boost in the context of deep-learning applications and was rapidly adopted across a wide range of performance-critical tasks. This technology is especially relevant for online applications, where only as much resolution is required as is necessary to make control decisions with a certain confidence.
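The trade-off behind reduced precision can be made concrete with a small sketch. It rounds values to IEEE 754 half precision (binary16) using the standard-library `struct` format character `e`, and checks the relative error against the binary16 unit roundoff of 2^-11. The function names and the sample readings are hypothetical and serve only as an illustration. Whether roughly three significant decimal digits suffice depends entirely on the control decision at hand.

```python
import struct

# binary16 keeps 11 significant bits, so round-to-nearest introduces a
# relative error of at most 2**-11 for values in the normal range.
HALF_UNIT_ROUNDOFF = 2.0 ** -11


def to_half(x: float) -> float:
    """Round a Python float to the nearest IEEE 754 binary16 value (hypothetical helper)."""
    # struct's 'e' format packs to binary16 (round half to even) and raises
    # OverflowError for finite values too large for binary16 (max 65504).
    return struct.unpack("<e", struct.pack("<e", x))[0]


def max_relative_error(values):
    """Largest relative error introduced by storing `values` in half precision."""
    return max(abs(to_half(v) - v) / abs(v) for v in values if v != 0.0)


if __name__ == "__main__":
    # Hypothetical readings, chosen only for illustration (all in the normal binary16 range)
    readings = [0.1, 1.2345, 3.14159, 27.5, 1013.25]
    for r in readings:
        print(f"{r:>10} -> {to_half(r)}")
    err = max_relative_error(readings)
    print(f"max relative error: {err:.2e} (bound {HALF_UNIT_ROUNDOFF:.2e})")
```

Halving the storage per value also halves the memory traffic, which matters given the widening gap between computing power and memory bandwidth.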

To make full use of the computational power available on a given architecture, it becomes even more important to parallelize existing algorithms and to fine-tune the implementations for the target hardware. Nowadays, the computational model has to handle multiple levels of parallelism. Even in the simplest case, the computational load is first distributed between computing nodes, then across the computational units within each node (GPUs, FPGAs), and finally across the inherent parallelism of each unit. Advanced applications might exploit hidden parallelism available within some computing units and add another layer of scheduling. Complex cache hierarchies at each level and communication asymmetries between units within the same node and across different nodes further complicate the data flow and scheduling. The best results can only be achieved if task-specific quality requirements, the variety of possible algorithms, and all aspects of the underlying computing platform are considered together in a holistic way. Here, performance modeling and analysis are indispensable instruments for identifying performance bottlenecks, which allows efforts to be focused on the performance-critical components.
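The three-level distribution described above can be illustrated with a minimal static sketch. It assumes a hypothetical workload of independent, equally expensive items, for instance tomographic slices. The sketch splits the index range first across nodes, then across the devices of each node, and finally across the workers of each device. The helper names `split_evenly` and `hierarchical_partition` are illustrative only. A real scheduler would also have to account for cache hierarchies, communication asymmetries, and load imbalance.

```python
from typing import Dict, List, Tuple

Range = Tuple[int, int]  # half-open interval [start, stop) of work items


def split_evenly(start: int, stop: int, parts: int) -> List[Range]:
    """Split [start, stop) into `parts` contiguous chunks whose sizes differ by at most one."""
    base, extra = divmod(stop - start, parts)
    chunks, lo = [], start
    for i in range(parts):
        # The first `extra` chunks receive one additional item
        hi = lo + base + (1 if i < extra else 0)
        chunks.append((lo, hi))
        lo = hi
    return chunks


def hierarchical_partition(
    n_items: int, nodes: int, devices_per_node: int, workers_per_device: int
) -> Dict[Tuple[int, int, int], Range]:
    """Assign each (node, device, worker) triple a contiguous range of work items."""
    plan = {}
    # Level 1: distribute the whole range across compute nodes
    for node, (n_lo, n_hi) in enumerate(split_evenly(0, n_items, nodes)):
        # Level 2: distribute each node's share across its devices (e.g. GPUs)
        for dev, (d_lo, d_hi) in enumerate(split_evenly(n_lo, n_hi, devices_per_node)):
            # Level 3: distribute each device's share across its parallel workers
            for w, rng in enumerate(split_evenly(d_lo, d_hi, workers_per_device)):
                plan[(node, dev, w)] = rng
    return plan


if __name__ == "__main__":
    plan = hierarchical_partition(2048, nodes=4, devices_per_node=2, workers_per_device=8)
    print(plan[(0, 0, 0)], plan[(3, 1, 7)])
```

For example, `hierarchical_partition(2048, 4, 2, 8)` assigns 32 consecutive items to each of the 64 workers. Keeping each range contiguous favors data locality at every level, but a static split cannot react to uneven per-item costs.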

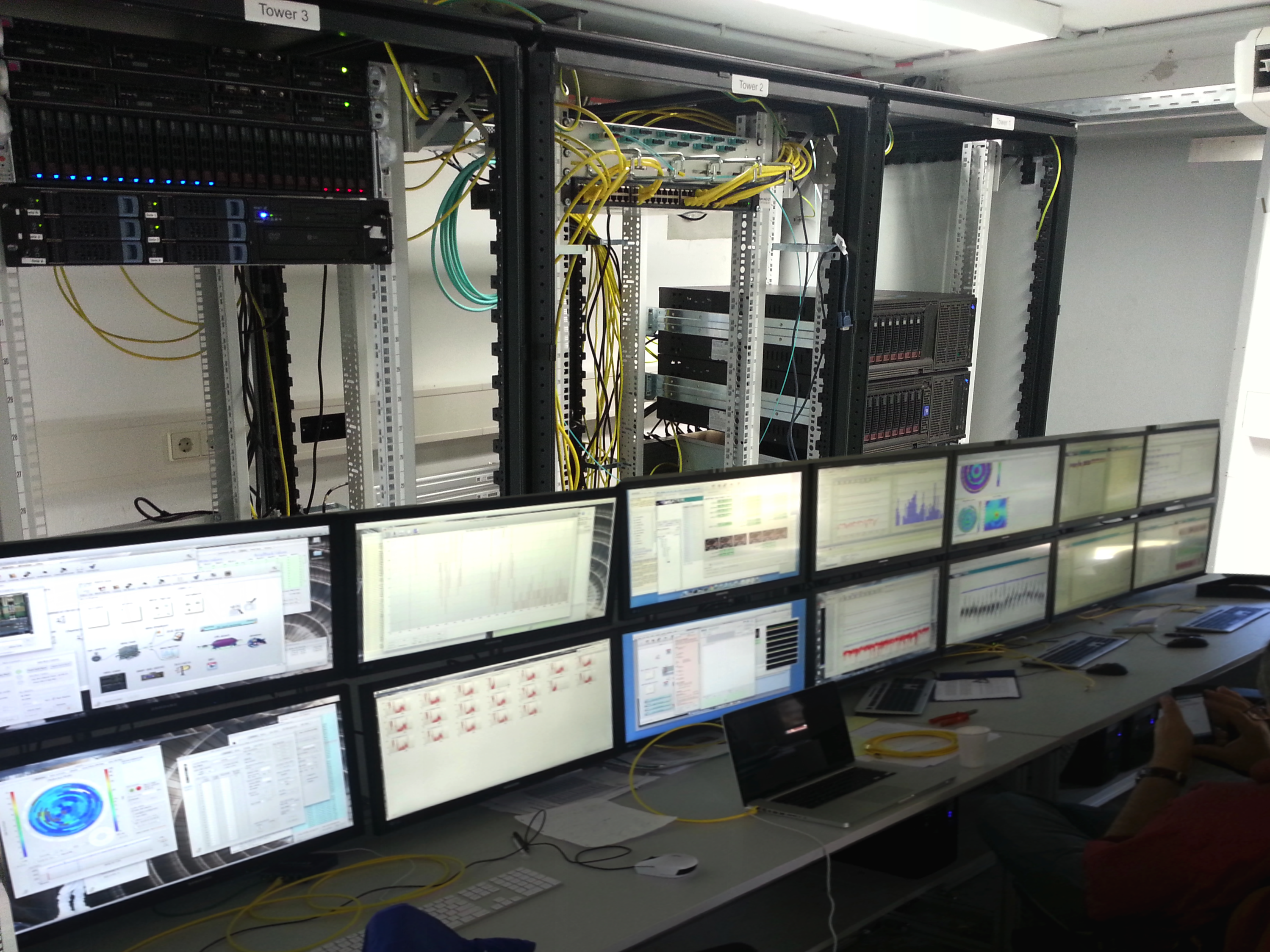

Small research groups often lack the expertise and resources required to develop ever more sophisticated DAQ systems. At IPE, we aim to bridge the gap between detector development groups and computing centers with their HPC clusters. We see IaaS and PaaS infrastructures as core building blocks of a common infrastructure that pushes data from the detector directly into the local computing center and relies on HPC resources for online data processing and reduction. We develop technologies to organize a fast and reliable data flow between the detector and the HPC infrastructure. We also design full-scale control and data acquisition systems, including both hardware and software components, taking utmost care to optimize the software for the selected hardware configuration.

Our Competences

At IPE, we have a broad range of competences in algorithms, computing models, hardware architectures, and communication protocols. We operate a development cluster equipped with several generations of GPUs from AMD and NVIDIA and two types of fast interconnects based on InfiniBand and Ethernet technologies. FPGAs from Xilinx and Altera are available as well. The main areas of research include:

- Parallel Algorithms

  - Linear algebra

  - X-ray tomography

  - Ultrasound tomography

  - Particle image velocimetry

  - High-energy particle physics

- High-performance computing for online applications

  - Heterogeneous computing with CPUs, GPUs, FPGAs, and custom accelerators

  - Reduced-precision computations and hardware-aware techniques

  - Parallel programming with CUDA and OpenCL

  - Multi-GPU and distributed computing with NVIDIA GPUDirect and AMD DirectGMA

  - Benchmarking, performance analysis, and software optimization

  - High-bandwidth data acquisition and low-latency control

  - Linux kernel development, PCI drivers, scatter-gather DMA engines, etc.

  - High-speed distributed storage with iSER, DRBD, and Linux AIO

  - Distributed file systems for data-intensive workloads (GlusterFS, Ceph, BeeGFS)

  - Low-latency InfiniBand and Ethernet networking using RDMA and RoCE technologies

  - Slow control platforms based on EPICS and National Instruments

- Data Intensive Cloud

  - IaaS (VMware, oVirt, and KVM) and PaaS (OpenShift/Kubernetes) cloud infrastructure for data-intensive applications

  - Optimized communication of CRI-O containers within Kubernetes infrastructure

  - Scientific workflow engines for cloud environments

  - HPC and database workloads in cloud environments

  - Desktop image analysis applications in cloud environments

Our applications focus on online data processing, control, and monitoring. One prominent example is fast imaging algorithms for X-ray and ultrasound tomography. Within the framework of the UFO project, our technology made it possible to build a novel beamline for high-throughput and time-resolved tomography with online monitoring and an image-driven control loop. Another of our flagship projects is a GPU-based prototype of the L1 track trigger for the CMS experiment. We also have applications in materials science and fluid mechanics.

Our Technologies

- DAQ for high-resolution image sensors

- DAQ for high-energy physics

- DAQ for KATRIN

- DAQ for superconducting sensors

- DAQ for Tristan

- GPU Computing

- Medical image processing

- Measuring and control electronics for KATRIN

- Neural networks for accelerator physics

- Slow control and data management

- Terabit/s optical data transmission

- Ultrasound computed tomography

- Ultrasound therapy systems

- Ultrafast X-ray imaging

For Students

We can offer a variety of research topics for candidates interested in high-performance computing, performance analysis, and optimization. We continuously offer internships on “software optimization”. We also frequently offer more specific Master's thesis topics within ongoing projects.

We generally expect prior experience in parallel programming. We will be happy to welcome you at IPE if you are proficient in C and have some experience with CUDA/OpenCL and/or multi-threaded programming. If you have the required experience and are able to work independently, we are also flexible in adapting topics to your interests and might even sketch a new topic within the domain of high-performance computing.